HPE SmartMemory DIMMs and HPE NVDIMM-Ns can be populated in many permutations that are allowed but may not provide optimal performance. The system ROM reports a message during the power-on self-test (POST) if the population is not supported or is not balanced.

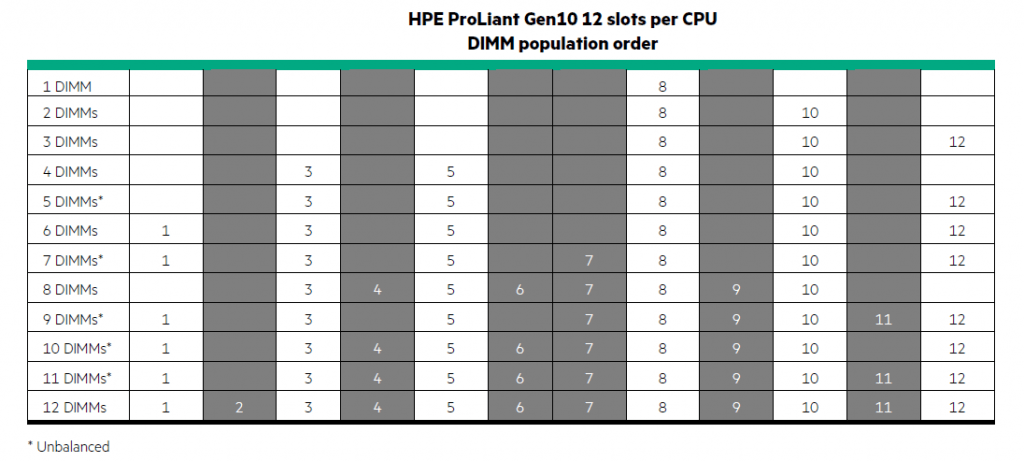

Table 2 shows the population guidelines for HPE SmartMemory DIMMs in HPE Gen10 servers with twelve DIMM slots per CPU (e.g., HPE ProLiant DL360, DL380, DL560, DL580, and ML350 Gen10 servers and HPE Synergy 480 and 660 Gen10 compute modules).

For a given number of HPE SmartMemory DIMMs per CPU, populate those DIMMs in the corresponding numbered DIMM slot(s) on the corresponding row.

As shown in Table 2, memory should be installed as indicated based upon the total number of DIMMs being installed per CPU. For example:

• If two HPE SmartMemory DIMMs are being installed per CPU, they should be installed in DIMM slots 8 and 10.

• If six HPE SmartMemory DIMMs are being installed per CPU, they should be installed in DIMM slots 1, 3, 5, 8, 10, and 12.
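The lookup described above can be sketched as a small table keyed by the number of DIMMs per CPU. This is an illustrative sketch only: the names `TWELVE_SLOT_EXAMPLES` and `slots_for` are hypothetical, and only the two examples given in the text are encoded. The remaining rows of Table 2 are deliberately left out rather than guessed.

```python
# Hypothetical helper: encodes only the two Table 2 examples quoted in the text
# (servers with twelve DIMM slots per CPU). Other rows are intentionally absent.
TWELVE_SLOT_EXAMPLES = {
    2: (8, 10),
    6: (1, 3, 5, 8, 10, 12),
}


def slots_for(dimms_per_cpu):
    """Return the DIMM slots to populate for a given DIMM count per CPU."""
    try:
        return TWELVE_SLOT_EXAMPLES[dimms_per_cpu]
    except KeyError:
        # Do not invent a population; point the reader back to the table.
        raise ValueError(
            f"No example recorded for {dimms_per_cpu} DIMMs per CPU; consult Table 2"
        ) from None


print(slots_for(2))  # (8, 10)
print(slots_for(6))  # (1, 3, 5, 8, 10, 12)
```

Raising an error for counts that are not recorded keeps the sketch from suggesting a population that Table 2 does not confirm.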

Unbalanced configurations are noted with an asterisk and may not provide optimal performance, because memory performance may be inconsistent or reduced compared to balanced configurations. Although the eight-DIMM configuration is balanced, it provides 33% less bandwidth than the six-DIMM configuration because it does not use all channels. Other configurations (such as the 11-DIMM configuration) provide maximum bandwidth in some address regions and less bandwidth in others. Applications that rely heavily on throughput are the most affected by an unbalanced configuration. Applications that rely more on memory capacity and less on throughput are far less affected.
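The 33% figure can be reproduced with simple arithmetic. The sketch below rests on assumptions that are not stated in the text: six memory channels per CPU, peak bandwidth proportional to the number of populated channels, and an eight-DIMM configuration that populates four channels with two DIMMs each. The function name `relative_bandwidth` is hypothetical.

```python
from fractions import Fraction

CHANNELS_PER_CPU = 6  # assumption: six memory channels per CPU


def relative_bandwidth(channels_used, channels_total=CHANNELS_PER_CPU):
    """Fraction of peak bandwidth, assuming it scales with populated channels."""
    return Fraction(channels_used, channels_total)


six_dimm = relative_bandwidth(6)    # one DIMM in each of six channels
eight_dimm = relative_bandwidth(4)  # assumption: two DIMMs in each of four channels

reduction = 1 - eight_dimm / six_dimm
print(f"{float(reduction):.0%}")  # 33%
```

Under these assumptions the eight-DIMM configuration reaches two thirds of the six-DIMM bandwidth, which matches the reduction quoted above.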

Table 3 shows the population guidelines for HPE SmartMemory DIMMs in HPE Gen10 servers with eight DIMM slots per CPU (e.g., HPE ProLiant BL460c Gen10 server blades and HPE ProLiant XL170r/XL190r/XL230k/XL450 Gen10 servers).

As shown in Table 3, memory should be installed as indicated based upon the total number of DIMMs being installed per CPU. For example:

• If two HPE SmartMemory DIMMs are being installed per CPU, they should be installed in DIMM slots 2 and 3.

• If six HPE SmartMemory DIMMs are being installed per CPU, they should be installed in DIMM slots 1, 2, 3, 6, 7, and 8.

Unbalanced configurations are noted with an asterisk. In these configurations, memory performance may be inconsistent or reduced compared to a balanced configuration.

Table 4 shows the population guidelines for HPE SmartMemory DIMMs in HPE Gen10 servers with six DIMM slots per CPU (e.g., HPE ProLiant ML110 Gen10 servers).

As shown in Table 4, memory should be installed as indicated based upon the total number of DIMMs being installed per CPU. For example:

• If two HPE SmartMemory DIMMs are being installed per CPU, they should be installed in DIMM slots 4 and 5.

• If four HPE SmartMemory DIMMs are being installed per CPU, they should be installed in DIMM slots 2, 3, 4, and 5.

Unbalanced configurations are noted with an asterisk. In these configurations, memory performance may be inconsistent or reduced compared to a balanced configuration.
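A proposed population can be compared against the examples quoted in this section with a short check. This is a minimal sketch: `DOCUMENTED_EXAMPLES` and `check_population` are hypothetical names, and the table holds only the slot lists stated in the text for the twelve-, eight-, and six-slot platforms. It is not a substitute for Tables 2 through 4.

```python
# Hypothetical structure: {slots per CPU: {DIMMs per CPU: recommended slots}}.
# Only the examples quoted in the text are included.
DOCUMENTED_EXAMPLES = {
    12: {2: {8, 10}, 6: {1, 3, 5, 8, 10, 12}},
    8: {2: {2, 3}, 6: {1, 2, 3, 6, 7, 8}},
    6: {2: {4, 5}, 4: {2, 3, 4, 5}},
}


def check_population(slots_per_cpu, installed_slots):
    """Compare installed slots with the documented example for that DIMM count.

    Returns None when no example is recorded for the platform or DIMM count.
    """
    installed = set(installed_slots)
    expected = DOCUMENTED_EXAMPLES.get(slots_per_cpu, {}).get(len(installed))
    if expected is None:
        return None
    return {
        "missing": sorted(expected - installed),     # recommended but empty
        "unexpected": sorted(installed - expected),  # populated but not recommended
    }


print(check_population(8, [2, 3]))   # {'missing': [], 'unexpected': []}
print(check_population(6, [1, 2]))   # {'missing': [4, 5], 'unexpected': [1, 2]}
print(check_population(12, [1, 2, 3]))  # None (no example recorded for three DIMMs)
```

Returning `None` for unrecorded cases separates "not covered by this sketch" from "does not match the guideline".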